I’ve started the next Xbox 360 laptop and it’s going to use the Elite guts, allowing for a digital video connection among other things. I was very curious to see what changes, if any, were made inside the unit to account for the infamous “Red Ring of Death” problems. What I found was reassuring, at least for anyone buying the Elite, and provided some clues as to what might be happening in the defective units.

So here’s a guided tour in classic Ben style. I made the pictures nice and big because I personally can’t stand it when I try to find out what something looks like inside, only to find postage-stamp-sized pics that were probably taken with a 1997 Mavica.

The Elite came apart pretty much like any other 360 I’ve gotten my mitts on. The metal cage revealed by opening the bottom of the unit looked a little different, at least from what I can recall: more, smaller holes instead of fewer, larger ones.

The bottom of the unit.

The faceplate area is also the same, complete with the “Don’t break the seal” label that tells MS if you’ve gone in for a swim.

The new 3 year warranty… voided in seconds with merely an X-Acto knife.

After remembering how the clasps in the rear are undone (simply insert a thin screwdriver into each of the slits) I popped open the first half of the case. Inside I found a bit of plastic dust, for lack of a better term. Never really seen anything like this before in a console. Perhaps it’s a new feature?

Comes with free plastic dust. Do not use near cribs or asthmatics.

Below is the unit thus far. Quite obvious is the new model DVD drive and the heat pipe “extension” for the GPU’s heat sink. I’ve seen some photos of these being added to fixed units, in France or something, but I guess they’re putting them in the Elite as well. Good show MS. Now get those 65nm ICs all up in this.

A BenQ drive… hm. I believe before there were either Hitachi-LG or Toshiba drives. Perhaps this drive is quieter; well, it couldn’t be any louder, I suppose. On that note, I don’t think the drive is loud because it’s a piece of junk or anything, it just spins the disc really fricking fast. I know, I’ve run them with the drive open before and it actually scared me. You’d half expect the disc to fly out of the drive and stick into the wall; better call Mythbusters and have them check it out with some ballistics gel.

I don’t believe I’ve seen this brand of DVD-ROM in a 360 before.

The drive isn’t held in place by anything, just the case. I believe this is called “mechanical retention”. I guess whatever works.

The DVD drive is held in place by mechanical retention / prayer.

Left: old jet-engine-decibel drive. Right: newer, not-quite-as-loud drive.

While the new drive looks thinner, it actually isn’t when you include the top “UFO” portion.

The drives side-by-side.

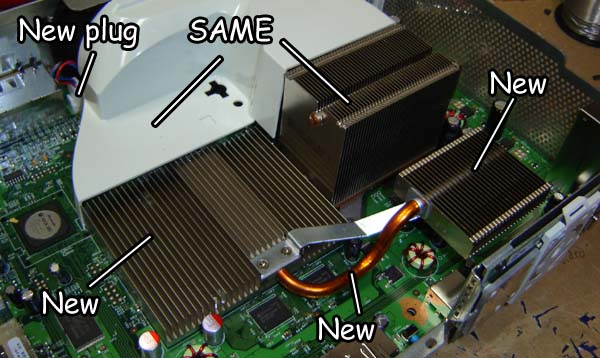

Here’s a good view of the heat sink area. The CPU ‘sink is the same as before, because we all know the problem was with the GPU. Now not only is there a heat pipe going over the GPU but the material the heat sink is made out of seems different as well – it doesn’t feel like aluminum. (Originally it was a fairly generic aluminum heat sink that would honestly look more at home in a 486.) Maybe I can get NASA to scan it for me, or maybe who cares as long as it works?

Heatsink area, with easy-to-follow labeling system.

This photo also kind of shows how the Xbox’s cooling is a bit weak. There are no fans directly on any of the components, just 2 large ones at the back of the case and a “tunnel” to pull hot air from the chips. This would be akin to having only a rear fan on your PC and nothing on the CPU or graphics card.

In comparison the PS3, which I may or may not have opened and worked on, has a huge fan which pulls air from the rear and ejects it out the top (or right side if laid flat; is it just me or does the PS3 look stupid sitting on end?). The air goes through a very large system of heat pipes and radiators along the way and is fairly similar to what’s probably on a Geo Metro. It’s very ridiculous looking when you first see it, but then again we never hear about massive failures on the PS3, do we? Of course this could be because a mass of people haven’t bought them yet… But I digress, back to the 360 Elite:

Below is an overview of the motherboard. It’s very similar to the original but seems more optimized. Thinner capacitors have been used, which is probably why the board looks more bare. The HDMI/AV output plug is very weird… it has the same pin-out as the old A/V plug, but the through-hole connections are split in half on the bottom of the board. The first 15 pins are on one side, there’s a gap in the middle, and then the remaining 15 pins are on the other side. This gap probably indicates where there’s a surface mount connection for the HDMI on the top of the board. Weird.

A top-down shot of the entire motherboard, with only some flash reflection wrecking it.

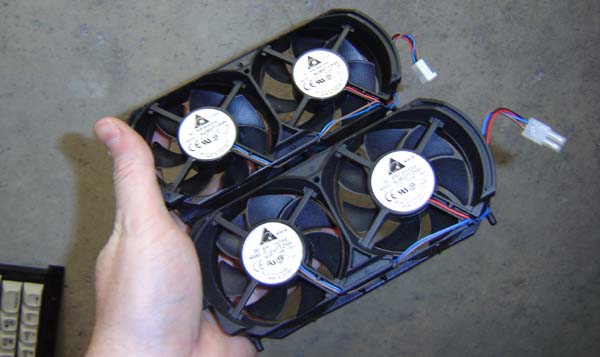

The Elite’s fan and a standard 360 fan. Pretty much the same, only the plug has changed. I did notice the fan seems to change speeds a little bit, but maybe I was imagining things.

Can you tell a difference? Only the Shadow knows, because apparently Alec Baldwin is Chinese?

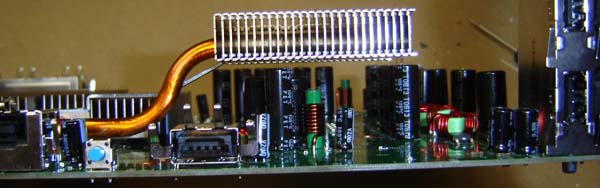

Below is an arrow indicating airflow. You can see how the heat that travels up the pipe from the GPU is intended to go into the mini radiator and be in the same “airflow line” as the CPU. It’s too bad there’s a pesky wall of metal and the Ring of Light blocking things to the right of this. Oh well, the 360 probably wouldn’t look very cool if the front of it was covered with holes. But it would probably run cooler that way.

This arrow allegedly represents air.

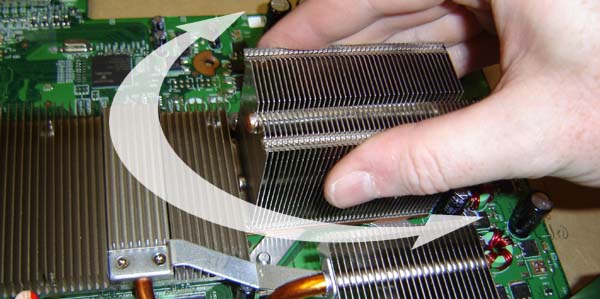

I then proceeded to pull off the heat sinks. Immediately I noticed that they had some wiggle to them, kind of like a PC heat sink usually does. The older 360’s heat sinks were quite well locked in place, and I’m not talking about the X clamps on the bottom either. The thermal paste on the older boxes was quite hard and you had to literally “break” the heat sink off the chips.

Twist, and then shout if you bust it.

True enough, once I pulled them off and had a look, the thermal paste on the heat sinks was much – for lack of a better word – GOOEY-ER than it used to be. Perhaps this allows for more expansion and thus a more consistent connection between the die and the heat sink. But I’m no brain scientist (for 50 points what movie was that term in?) so it’s hard for me to say for sure.

Here’s a side view of the GPU’s extended heat sink. It just barely clears the capacitors.

This thing looks cool, yet tacked on at the same time – much like Rachael Taylor’s character in Transformers.

On the bottom of the board we once again have the thermal pads under the memory that sink the heat into the RF shielding cage.

Thermal pads under the memory, same as before.

OK here’s the best surprise of all. Framing both the GPU and CPU are bits of hot-melt glue, or perhaps a type of epoxy resin. This is one of the oddest things I’ve seen in an electronic device, and I’ve opened an Intellivision before!

The GPU. Apparently Microsoft loves hot glue as much as I do. Not that I blame them…

This would be a good thing for you all to discuss in the comments. Does the glue keep the IC from drifting? Does it align the chip better for when it bakes, when they melt the BGA (ball grid array) solder points on the bottom? You’d think the chip couldn’t really drift once the unit is assembled as the heat sinks are clamped down onto it. But then again, these heat sinks aren’t held firmly in place by the thermal paste, so maybe drifting is some remote possibility and they’re hedging their bets with this glue.

The CPU. More of this mystery glue.

It was time for me to test this glue substance. I pulled out all the stops and performed the most advanced analysis possible. First I did what any toddler would try and chewed on it. Its consistency was similar to hot glue but much stronger, so if it is hot melt it probably requires a higher temperature than the glue gun I bought at Wal-Mart for 2 bucks. (Hopefully it melts at more heat than what the chip can pump out during a Gears of War marathon.)

Regardless, I am quite impressed by the precision CNC-controlled gluing they have accomplished here, and I wish I had a machine capable of that for my own. A hot glue robot – just think of the possibilities.

Near aerospace quality spectrometer testing being done on the glue, using the latest equipment in our state-of-the-art facility that doubles as a townhouse basement.

Well that’s about all I have to say about the Elite at this time. In short it looks like they’ve taken steps to improve the cooling of the GPU, even if the “glue frame” leaves us scratching our heads. If you’re on the fence about getting a 360 because of the “ring of death” reports, I’d say go for it anyway, especially if you can afford an Elite since it seems to have been updated. I wouldn’t be surprised if newer Cores and Premiums are improved as well. Now if only Microsoft would include built-in WiFi – maybe when the 65nm chips hit, which should also end the cooling problems for good – then they could truly stomp the PS3 into dust.

Can’t wait to see it 😉

Perhaps the glue doubles as a frame to make the IC look more appealing?

Great in depth look Ben! Kudos!

One thing though on your closing comment:

“maybe when the 65nm chips hit, which should also end the cooling problems for good”

When you decrease the line width of your chip process you don’t decrease the running temperature of the part. Actually, you tend to increase running temperature. Think of it this way. When you talk about the line width you are actually talking about the minimum size you can make precision parts. The biggest electrical savings are seen in the reduction in the size of the “FET fingers” … or for the non-engineers, the sliver of metal that allows transistors to work. Transistors (FETs) are used primarily to open and close electrical “gates” or “switches” at an extremely fast rate (GHz by today’s standards) to pass a specific current (usually biased to rail voltage in digital CMOS) to the next part of the chain. If you are looking for identical functionality from your chips, you need to have the same electrical point-by-point characteristics present between the two chips you’re comparing. So, by keeping all things but line width the same, you have less area overall to dissipate your heat. The result: parts that will leave a nice blister on your finger after momentary contact.
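One rough way to put numbers on this argument is power density, the heat produced per unit of die area. The sketch below assumes an ideal straight shrink in which die area scales with the square of the feature size; real shrinks rarely scale this cleanly, and the power ratios are hypothetical inputs rather than measured Xbox 360 figures.

```python
# Rough power-density sketch for a process shrink.
# Assumptions (not from the comment): an ideal straight shrink where die area
# scales with the square of the feature size, and hypothetical power ratios.


def area_ratio(old_nm: float, new_nm: float) -> float:
    """Ideal die-area ratio (new / old) for the same design at a smaller node."""
    return (new_nm / old_nm) ** 2


def power_density_ratio(power_ratio: float, old_nm: float, new_nm: float) -> float:
    """Change in heat per unit area: (P_new / P_old) / (A_new / A_old)."""
    return power_ratio / area_ratio(old_nm, new_nm)


if __name__ == "__main__":
    print(f"Die area after shrink: {area_ratio(90, 65):.2f} of the original")
    # Same power squeezed into the smaller die (the scenario described above).
    print(f"Same power: {power_density_ratio(1.0, 90, 65):.2f}x the power density")
    # Hypothetical case where the shrink also halves the power draw.
    print(f"Half power: {power_density_ratio(0.5, 90, 65):.2f}x the power density")
```

Under these assumptions a 90nm to 65nm shrink leaves roughly half the die area, so at equal power the heat per square millimeter nearly doubles. If the power draw also drops by about half, the density ends up close to where it started, which is why the later comments about lower wattage matter to this argument.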

If MS was going to go to a 65nm process to produce a newer version of the Xbox 360 effectively, they would need to completely revamp the architecture and clocking internally as well as pay their outsourced digital design engineers another 6 months of consulting fees. At that point all comparisons are off other than the fact that potentially, the same result is met (a.k.a, the thing actually works as intended). With the new architecture would almost certainly come new “tricks of the trade” to keep the heat monster at bay such as the addition of “dummy structures” and “uniform metal distributions.”

And just think, 40nm is just around the corner … with all the headaches that go with it.

Hope that didn’t get too technical …

Keep up the great mods, they are truly an inspiration!

I believe the glue was so the heatsinks wouldn’t bend the board. It’s kinda hard to explain.

How much do you think the pricing will be on one of these?

Not even Scooby Doo? Preposterous! Anyways, I’m wondering if M$ changed the cheese grater for efficiency or cost….

the hot glue is to keep the awesome from flying out of the case. i know it from my science studies.

Great article, Ben!

Thanks for providing some insight into the great Red Ring of Death mystery.

I don’t think the heatsink would bend the board and it touches the top of the die, not the main surface of the chip.

RE: Matt, that’s strange what you said about the 65nm chips. When I built my PC I noticed the 65nm Intel stuff tended to require, on average, about half the wattage of comparable 90nm AMD stuff.

I understand what you mean about shrinking parts and more heat (because there’s more resistance – fall of man) but I was under the impression that heat and power reduction was a major feature of 65nm parts.

It’s been widely reported that MS is doing a 65nm rework of the 360 that’s to arrive this year. A lot of people were miffed that the Elite didn’t use it.

I just read this interview which was quite interesting…

http://www.joystiq.com/2007/07/13/engadget-and-joystiq-interview-peter-moore-head-of-xbox/

…but the RROD problem is fairly obvious to anyone who’s opened a 360 or run it open – the GPU is not sufficiently cooled, and under the DVD drive to boot.

The MythBusters actually did test whether the spinning discs inside PCs could possibly do any harm… oh, it was busted, by the way.

seems kool so i pre-ordered 1

Hey Ben. Great article, been waiting to see the insides of an elite for a while now. The deal with the 65nm chips, they do run cooler on average because the smaller the part, the better the heat dissipation. So in theory, it could put out more heat energy, but still run cooler because that energy bleeds away faster.

ben, do u think the grafx r better on the elite or the old 360 or is there no change?

Still no chip smashers? 🙁

😉

Does the laptop part work??

Yea Ben, you have to find out when M$ is going to start putting the chip smashers into the 360s. You did a great job with this though. I really hope to see the finished portable.

btw MGS4 is not coming out for 360

360 isn’t as good as you think it is

30% failure for their consoles ahahaha

Kaynes a PS3 fangirl!

Very Nice Ben, You should send someone the case so they can put it on a normal 360! 😛

Sounds very nice, can’t wait to see it finished.

Btw can i have the case and faceplate from very lucky xbox?

Nope, no chip smashers in the Elite either. Maybe the next model?

Any thoughts on E3 and the future of videogaming?

The 360 laptop was nice and the article on how you made it and such was pretty interesting, hopefully the Elite will turn out to be an elite success. Kudos!!

@ Ben

You hit the nail on the head. Your 65nm Intel is working at half wattage. That means that rail voltage for the chip was significantly decreased and hence current can be much lower and less heat is made. That does not correlate to the heat dissipation. All that means is Intel, with their genius minds, created a chip that does the same thing as the 90nm chip with lower electrical values more efficiently.

For example, I heard from a semiconductor industry professional at a conference in 2004 that the first P4s that were put in notebooks were only ~50% efficient. That means, 1/2 the energy goes to computing and 1/2 goes to heat. If you can’t dissipate the heat (which many of the first P4 notebooks couldn’t) the heat has to go somewhere … usually, because of the CPU placement and sub-par heat transfer on the early models, a very toasty lap.

To sum it up, going from a 90nm -> 65nm redesigned chip:

Decreased power … absolutely

Decreased heat generation … likely (depends on the efficiency of the chip)

Increased heat dissipation (on chip) … no, there’s ~1/3 less surface area to skim what’s created.

The decreased heat that could be seen is merely a result of more efficient ways of computing information. That’s not to say it won’t be an issue though … if not properly controlled, just like any other electrical part, there will be the same issues. Although, I find this very unlikely for a 2nd pass release. After a few internal revisions, the bugs are usually all figured out.

@Raven

Actually, the exact opposite of what you said. Read above.

Nice article, always a pleasure to read your stuff.

I was surprised to see a warning printed in Finnish on the BenQ drive, as it is quite a minority language by European standards, and the unit was made for the US market.

Hah, I actually met the guy who ordered this thing. He’ll be pleased. His name’s “Dan”.

Why you gotta be hatin on the Finnish?

Ever thought about a PS3 80GB or 60GB laptop maybe?… Obviously if u had someone to give u the money.

Matt: They only shrink the actual transistors, not the entire chips; the Core 2 Duo’s die under the IHS is about as big as my Athlon’s was.

And 65nm Runs cooler than 90nm or 130nm, because of improved architectures, etc.

Ben

Is there any way to use the video connector on a normal 360 and convert it to HDMI? If so, could you show us?

I think maybe the glue is a safe-guard against all the heat. Things like to warp when they get hot. Warping could threaten the integrity of your connections… I dunno, the stuff I work on is much bigger (forklifts).

We use goopy thermal compound because it will get into all the nooks and crannies, thereby allowing effective heat transfer. But I suppose you might use one that also has a glue-type effect if there’s no way to bolt it down…

Nice article.

Ben,

What was the date this Elite was produced? I know the launch Elites did not have that added heatsink, so some of us are trying to figure out when MS started adding them.

the Epoxy glue is to keep the chips edges from lifting off the board. Before the heatsink redesign, too much built up heat would cause the mainboard to warp and the GPU’s corners would lift (ever so slightly) breaking contact with the solder points.

@Ben

are you considering making another book? I’d buy it in a heartbeat.

Great news Ben! I’m only waiting for Microsoft to reduce the price, and then the Xbox may suit me too.

Wow, what a 360 fanboy…

Nice article though.

Benheck seems to be just an Xbox 360 fanboy, which is a shame coming from someone who should understand how things work. That said, this article is pretty informative.

Strange glue. I can’t believe it’s epoxy-based; if you burn epoxy it produces a sweet smell, doesn’t it? It looks like some phase-change material (PCM) with a defined melting point. For PCs, 113°F is usually used, though I know higher values are available. PCMs are also used as a grease mixed with a thinner that bakes off. The best cooling is reached during the liquid phase.

The GPU heatsink looks like the workaround MS uses for repairs.

I did some tests with the previous 360 six months ago as a pre-project. An input power of 140 W is a lot for such a small box. The airflow is very low: I measured 7 CFM at 5.45 V (during all tests), and 15 CFM would hypothetically be possible at 12 V. I estimated the flow over the GPU at only 20%…. I won’t say more about it. I think the engineers calculated it all well.
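The airflow figures in this comment can be turned into a rough bulk air temperature rise with the basic energy balance P = ρ·Q·c_p·ΔT. The sketch below assumes standard room-temperature air properties and, as a simplification not stated in the comment, that all 140 W is carried out by the fan airflow. It says nothing about local hot spots such as the GPU, whose power draw the comment does not give.

```python
# Bulk exhaust-air temperature rise from an energy balance: P = rho * Q * c_p * dT.
# Assumptions (not from the comment): room-temperature air properties, and that
# all of the dissipated power leaves the box in the fan airflow.

CFM_TO_M3_PER_S = 0.028316846592 / 60.0  # 1 cubic foot per minute in m^3/s
AIR_DENSITY = 1.2            # kg/m^3, approximate at room temperature
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg*K)


def air_temperature_rise(power_w: float, flow_cfm: float) -> float:
    """Return the average temperature rise of the exhaust air in kelvin."""
    mass_flow = AIR_DENSITY * flow_cfm * CFM_TO_M3_PER_S  # kg/s
    return power_w / (mass_flow * AIR_SPECIFIC_HEAT)


if __name__ == "__main__":
    for cfm in (7.0, 15.0):  # measured flow vs. the hypothetical 12 V flow
        print(f"{cfm:4.1f} CFM -> about {air_temperature_rise(140.0, cfm):.1f} K rise")
    # The estimated 20% share of the measured flow that passes over the GPU.
    print(f"GPU share: {0.20 * 7.0:.1f} CFM")
```

Under these assumptions, 7 CFM carrying 140 W heats the air by roughly 35 K on average, while about 15 CFM would cut that to roughly 16 K. The estimated 20% share over the GPU works out to only about 1.4 CFM.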

RE me being a fanboy, last generation I was all about the PS2, before that, the N64. I thought the Xbox 1 was basically an ugly POS. To go back further I was Genesis>SNES… So I definitely blow with the wind, and never stick to liking one company over another. Only recently did I start to dislike Nintendo, I thought the Gamecube was swell.

I’ve been impressed with the 360 and despite the flaws (which are probably exaggerated by the press like all things) it seems like the best bet this coming generation due to the number of games, price, and the Xbox Live X-perience. And with the price now seemingly officially dropped and the super warranty, there’s more reason than ever to “Jump In.” People complain about being on their 3rd or 4th 360… but notice they’re still playing it and haven’t given up? That should speak volumes.

Plus I am pushing the Xbox because I truly believe if the Wii won it would be bad for gaming in the long run. A person can pay $60 for a AAA $20 million game (like Lost Planet or GTA4) or $50 for a cheapo collection of minigames that was probably done in somebody’s garage. Or $40 for a game that’s 5 bucks on XBLA. What’s a better value for the consumer? Plus the Wii’s 3rd party attach rate is abysmal, so all it helps is Nintendo, not 3rd party developers.

What is the production date of the system?

Hey Ben, thanks for such a detailed insight into the new 360. I was just wondering, what does the MFG date, the Lot Number and the Team Name say on the box for that sucker? Thanks.

Oh, and call me crazy, but if you stood the system up on its side, wouldn’t that render the additional heat sink useless? Heat rises, and that puts the additional heat sink BELOW the heat source.

Interesting article, though I was amused by some of the comments. Matt’s comments regarding heat dissipation and process shrink were, I thought, a bit confusing (though not really “wrong” per se), so here are my thoughts and amplifications of Matt’s commentary.

First, there are a few reasons to shrink the process size for a chip design. One reason is to create parts that can be clocked faster — because smaller transistors switch faster, and because shorter signal paths inside a chip mean lower latencies. However, the Xbox 360 isn’t ever going to ship with faster chips because the general rule with game consoles is to keep the architecture as close to the same as possible, so the developers don’t have a “moving target” to shoot for.

Another reason to process shrink a chip design is economic: You can fit more chip dies on a wafer if the chip die can be shrunk. (Incidentally, Brutuz’ comment is not 100% correct. His comparison is flawed, because he’s comparing two different chip architectures. Intel and AMD both have a habit of increasing cache size when they do process shrinks, which may keep the die size about the same or may even grow the die size, depending. Increasing cache means increasing transistor count.)
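To put rough numbers on the economic argument, a commonly used gross-die approximation divides the wafer area by the die area and subtracts an edge-loss term for the partial dies at the rim. This is only a sketch: the 300 mm wafer and the 150 mm² die are hypothetical round numbers, not actual Xenon figures, and the estimate ignores defect yield and scribe lines.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate gross dies per wafer (ignores defects and scribe lines).

    DPW ~= pi * (d/2)^2 / S  -  pi * d / sqrt(2 * S)
    """
    gross = math.pi * (wafer_diameter_mm / 2) ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


if __name__ == "__main__":
    wafer_mm = 300.0                       # hypothetical wafer size
    die_90nm = 150.0                       # hypothetical die area in mm^2
    die_65nm = die_90nm * (65 / 90) ** 2   # ideal straight shrink of the same design
    print(f"90nm: {dies_per_wafer(wafer_mm, die_90nm)} dies per wafer")
    print(f"65nm: {dies_per_wafer(wafer_mm, die_65nm)} dies per wafer")
```

Under these assumptions the shrink roughly doubles the number of candidate dies per wafer, slightly more than the area ratio alone suggests, because smaller dies waste less silicon at the wafer edge.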

Another reason to process shrink a chip design is that you can improve a chip’s performance without necessarily increasing clock speed, typically by increasing cache size. (See my above parenthetical comment.) In this case, you’ve got a bigger “real estate” budget for your die, because you can fit more transistors on the same amount of silicon. SRAM is entirely composed of transistors (no capacitors holding bits, so no refresh required). So the chip itself might not shrink, but instead, the transistor count goes up because the cache size increases.

And last, but not least, one reason to process shrink a chip design is to reduce power requirements. This is because smaller gates require less voltage swing to get them to switch. If you keep the transistor count the same and shrink the process to create a chip, the power requirements tend to go down. The limiting factor on this is leakage current — smaller process chips tend to leak more current, which is purely dissipated as waste heat. (Go look up “chip leakage current” on Google, or check out http://www.eetimes.com/conf/isscc/showArticle.jhtml?articleId=18308042&kc=3681 which has a good summary of the problem and ways to solve it.) Exotic high-k gate insulators and other engineering work-arounds are needed to avoid this problem.

Small gates tend to require less current as well as less voltage (and Watts = Volts * Amps, as you may recall from basic EE), so keeping a lid on leakage current is paramount if you want to reduce the thermal design power for a chip. Yes, the chip may get smaller, which reduces the surface area for heat dissipation (something Matt was careful to point out), but improved heat sink materials with better thermal transfer properties (e.g., copper instead of aluminum) can alleviate that problem. Also, most modern chips incorporate heat spreaders to increase the effective surface area of the chip (and the contact area with the heat sink).
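The usual first-order model behind “smaller gates need less voltage, so less power” splits chip power into a dynamic switching term, α·C·V²·f, and a static leakage term, V·I_leak (the Watts = Volts × Amps relation applied to leakage current). Below is a minimal sketch of that model. Every number in the example is a hypothetical placeholder chosen only to show the trend, not a figure for the Xenon CPU.

```python
def cmos_power(activity: float, switched_capacitance_f: float, vdd: float,
               freq_hz: float, leakage_current_a: float) -> tuple[float, float]:
    """Return (dynamic_w, static_w) for a first-order CMOS power model."""
    dynamic = activity * switched_capacitance_f * vdd ** 2 * freq_hz
    static = vdd * leakage_current_a  # leakage burns power even when nothing switches
    return dynamic, static


if __name__ == "__main__":
    # Hypothetical "before" and "after shrink" numbers: the supply voltage
    # drops, but the leakage current rises after the shrink.
    for label, vdd, leak in (("old", 1.2, 5.0), ("new", 1.0, 8.0)):
        dyn, stat = cmos_power(0.1, 100e-9, vdd, 3.2e9, leak)
        print(f"{label}: dynamic {dyn:.1f} W + leakage {stat:.1f} W = {dyn + stat:.1f} W")
```

In this hypothetical example the quadratic voltage term dominates, so total power still falls, but the leakage share grows. That is the trade-off the comment describes, and why keeping leakage in check matters for the thermal design power.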

If the engineers at IBM are careful, the 65 nm version of the Xbox 360 processor should indeed have a lower thermal design power. But my guess is that the main motivating factor behind the process shrink is production cost, not improved reliability. As to why I mention IBM — they’re the folks who designed the Xenon CPU, and who manufacture it for Microsoft. ArsTechnica has an excellent article at http://arstechnica.com/articles/paedia/cpu/xbox360-1.ars for those who are interested.

Weird… my comment didn’t appear to post correctly…

I mean the way you talk about the PS3, not so much the Wii. You can say a lot of things about the Xbox 360, but you shouldn’t try to compare the cooling systems of the 360 and the PS3. Sorry if I bother you, I really like your articles.

IDK…i own a PS3, and would not consider purchasing a 360 for at least another 3-4 months, but i liked the comparison to a Geo Metro and the PS3 cooling system. I’ve never had a problem with overheating, and my system has basically been on since i bought it. Maybe Microsuck should take some ideas from Sony.

The glue is there because when the board heats up it will warp and the BGA will lift from the pads. The glue HELPS, but doesn’t completely stop the lifting of the chip, so when the board does warp, it won’t affect the BGA as much.

I can’t tell from the pictures, but this is probably factory; I can’t see the solder or any flux to tell if it was reflowed. I wouldn’t use the glue they have used, but whatever floats their boat; this glue might have some flex in it when heated up. I recommend products that cure at a high temp.

I’d love to know which Elites have the improved cooling; we got ours about a month ago. I’m hoping we got a new one. We had been looking for a while and not finding any anywhere, then all of a sudden (at the same time as the 3-year RRoD warranty program announcement) they were pretty common. Hopefully (fingers crossed) they weren’t available while they were producing and distributing the upgraded units.

In any case, we have the unit sitting horizontally, elevated with minimum 10″ of clearance all around (open in front and 12″ in back) with a fan blowing across the back to remove the hot exhaust air.

Date of the Console?

Yes, I would really like to know what the manufacturing date on that console is too, seeing as I’m getting an Elite in a few days. Thanks!

Hi Ben,

Thanks for the info, but, I have to take issue with your “current state of affairs” when it comes to the console industry on a couple of points:

1. The reason there are no third party Wii games is because most of the industry was betting on the other two consoles.

http://www.gamespot.com/news/6175764.html?part=rss&tag=gs_news&subj=6175764

2. You sure do sound like a fanboy! Jumping from one console to another brand console does not automatically cure you from your unfortunate affliction. 😛

It just means you’re a less discriminating fanboy.

Keep up the good work… love the laptop!

Hey, are they still using the X-clamps on their consoles? I’m getting mounts made that should solve the issue with the PCB bowing!! If it gets hot, fine, but if there were no pressure on the board and the CPU were mounted better, then a lot of the issues would be solved!! Also, if they did the same thing as the PS3 and put in a fan, even at a low speed, to get nice cool air in where the CPU is, I bet you there would be no issues with the Xbox 360. I’m going to be running some tests and will probably publish them on my website :)

I’ll answer some of these questions in the next post (which is for podcast 25).

Ben could you post the born on date and or lot number and team?

The Xbox definitely has a way to go. It needs to have integrated HD DVD support. Let’s be honest, the more features that the Xbox can offer, the better.

One thing is certain, Benheck: your Xbox laptop is genius. I think you should redesign the entire Xbox, improve everything and make M$ pay you for it. If Bill would get his head out of his joke R&D dept’s ass and wake up, he would see that he could rip the pants off of Sony in an instant if he’d listen to his end consumers. Sony is riding out their name, and they will charge what they charge because they know we are dumb enough and wealthy enough to buy it. If not, the Xbox should also aim to have Blu-ray support! If you can’t beat ’em……

good luck Ben.

I have the ring of death on my 360…. sending it back via their UPS label. I probably should include photographs of my 360 and a photo of the serial #. Anyhow, I read all of your article. Thank you for all of the information.

P.S. I’ve had my 360 for 15 months but do not play very often, less than 3 hrs a week.

What does the underside of the air shroud look like? I would want a partition in it so that one fan directly blows air to the GPU and the other fan directly blows air to the CPU. Why wasn’t the shroud extended to cover at least 1/2 of the CPU and GPU heatsinks, thereby ensuring cooling? (If not completely enclosing them and extending to the exhaust, thereby guaranteeing that the air will flow over the heatsinks.) A third fan would then be necessary to cool the other internal components. Why isn’t a laptop DVD player (slim) used instead of a full desktop DVD model? After all, they are already using laptop HDs. Lastly, why weren’t the larger holes retained? Smaller holes will likely clog up faster due to dust, thereby causing the unit to heat up more. It would have made more sense to use the wire fan covers found on desktop power supply fans, which ensure the greatest airflow possible.

Whoop-de-doo, I can leave a 360 on for more than 5 hours without a freeze… I don’t wanna knock this system, as I have all three (Wii, PS3 and 360), but each has its downs… And so what if the PS3 doesn’t have good games (well, that’s about to change over the next few months)? To me that’s the only downside. Oh, did I mention I’ve left my system on for close to 3 days running Folding@home with no freeze or heat problems yet… After Gears of War my 360 just collects dust, and no, I’m not looking forward to Halo 3; it’s overrated, I got bored a quarter of the way into Halo 2… By the time Microsoft gets its system right, for owners it will be way too late… They should just start working on a real next-gen system, because personally, no one wants to say it, but this system has too many flaws and won’t survive in the long run, and I’m sorry, but with all the 360 problems I’ve had I really feel like I’ve wasted money… And yes, the PS3 is worth the money even if you use it only as a Blu-ray player; don’t knock something ’cause you can’t afford it… And just to add my opinion on the Wii: it’s fun, addictive at times, CHEAP, but that’s why I don’t consider it next gen (graphics)…

I just got my 360 back from repair. It was a swap, meaning I did not get my unit back, and it does not have the new heat sink on it; my new Elite, which was made in 07/07, does. I had one of the early ones that started freezing, then did the weird video stuff, then finally died 2 days after the new warranty. I wonder how long this one will last!

I bought an Elite today, Lot #0726. I ought to be OK, right?

Further to my previous post:

Lot# 0726

Team: FDOU (I think it said…)

MFG: 06-30-07

(Plus I pried off the bottom bit and saw the new heatsink)

I’m not planning any marathon gaming sessions (I generally game 2-3 hours at a time), so I think I’ll be OK.

Hey Ben….and all you other smart dudes for that matter,

This is Freakin’ awesome. Your articles are all fantastic, as well as the comments that follow.

Keep up the good work

and please……..can i buy a 360 Laptop

I think the “hot glue” is there because they spent all their money on new hardware and software, so they had no choice but to cheap out on the assembly.

LOL

-Mort

Dear Sir,

I want to buy the new heatsink of the XBOX 360.

Please help me contact the vendor.

Thanks & Regards,

Kim Ngan

if u look here this is a console that is “Falcon” http://users.cescowildblue.com/jwsteed/images/heatsink2.jpg when do u think elites will have them?

OK, I have an idea for a cooling mod that should keep the red death at bay (if you do it before the console dies). I wanted to replace the GPU heatsink with a standard CPU heatsink and make the DVD drive external. I just need to know what the GPU socket size is. I can say for sure it’s not 775; I bought a socket 775 heatsink and it was too big. Does anyone know the socket size?

“But I’m no brain scientist”. The sentence is from the movie Back To The Future. It’s either 1,2 or 3, I don’t remember. Just want to say that you have a nice web page. I’ve learned quite a bit, and I think your works are great.

Torkiliuz.

I believe the “mystery glue” is probably a substance called RTV. I use it all the time in the electrical engineering field whenever I have a component I need to stay still on a board.

Hi! I need your help…I wanna change my DVD drive (in my xbox 360 Elite)

Do you think this dvd drive will work on my xbox —-> “Samsung SH-S223F SATA Bulk” ??

Looks like a well-designed piece of kit; it probably works better than the older ones, hopefully.

Heey again maan, can you make a video like “How to flash Xbox 360”

please man…

I have opened about 30 (to fix Red Rings) and just opened my first Elite today, though… This one has a different CPU heat sink, also made of the metal that is used on the new GPU heat sinks. It doesn’t have that copper tube running through it anymore either… hmm. I have pics if you’re interested.

The glue or thermal adhesive is the problem with the poor heat transfer. Once the system has been used long enough, the expanding and contracting of the heat sinks will eventually make the glue come loose from the heat sinks, creating a gap between the chip and heat sink. Removing the glue, using a thermal grease, and attaching the heat sinks to the chips better would solve the heat transfer problem. I have 18 years’ experience working with and building almost every type of heat transfer product for electronics cooling with Thermacore Inc. If you have any questions you can e-mail me at disturbeddesign@yahoo.com

Hey, I was just curious: when you took it apart, did you have X-clamps on the back of the motherboard? I took mine apart and didn’t, but all the videos indicate that there are X-clamps on the back. Just curious if that is a difference between the Xbox 360 Elite and the regular Xbox 360?

2 of them had the X-clamps, but one did not. I found out later that the one missing the X-clamps had been worked on once before, and they were probably removed then. As far as I know there is no difference between the different Xboxes except the addition of a heat-pipe-based heat sink on the GPU, which will not solve the overheating problem since they are still using the glue-type thermal paste and the poor X-clamps. Hope this helps.

One day my Xbox 360 Elite suddenly turned off. I thought it was the power supply and tried another, but the console cannot turn on. The red lights (RROD) never appeared. Any idea what the problem is and whether it can be solved? Thanks.

I have my Elite holiday bundle (Lego Batman and Pure) and it works smoothly, no problems, no rings, and I’ve had it for a year now. This helped me out a lot, but still: does limiting how long you play help prevent the red ring of death?

Boo to Microsoft and their Xbox 720: once a game has been played on one Xbox, you can’t play it on another. Bad, hey.

I had my Xbox for a week; we had to send it to AMD because the AMD chip would not function.

xbox suks im a ps3 & 4 guy